Orchestrating Ecosystem Data: 360insights + Partner Score + Twogether

Expert advice from David Ward, James Hodgekinson, Derek Larson, Jason Atkins, and Seb Tyack.

Introduction

Ecosystems, data, and AI converge to redefine how partnerships create value. The choices you make now about data architecture, measurement, and orchestration will determine whether your ecosystem becomes a competitive moat or a fragmented cost center.

Those choices include how you define your data model, your canonical schema and semantic layer, where you let data reside versus where you share transformed views, and how frequently you capture partner signals, from transactional revenue to short, contextual micro-feedback.

Get the orchestration model right — clear data contracts, repeatable ETL patterns, and well‑scoped playbooks — and you enable personalization, early warning systems, efficient enablement spend, and safe AI‑assisted actions.

Get it wrong and you’ll inherit duplicated integrations, stale reports, brittle automations, and unhappy partners. This article is dedicated to showing you the orchestration strategy, systems, and partnerships the 360insights Ecosystem is building to create a durable moat!

Get ready! AI will move from advice to action — an operational co‑pilot that executes with human guardrails -Jason Atkins

Table of Contents

- Why 360insights Partnered with Partner Score

- Why 360insights Partnered with Twogether

- Why 360insights Partnered with Spalding Ridge

- Composability and standards: design patterns that scale

- When AI agents can safely run the engine: a practical roadmap

- How to start: an actionable 90-day plan

- Common pitfalls and how to avoid them

- Metrics that matter for ecosystem performance

- Security, privacy, and trust in ecosystem data

- Scaling partner experiences across geographies and languages

- Integration checklist for your tech stack

- Vendor and partner playbook: roles and responsibilities

- FAQs

- Conclusion

Why 360insights Partnered with Partner Score

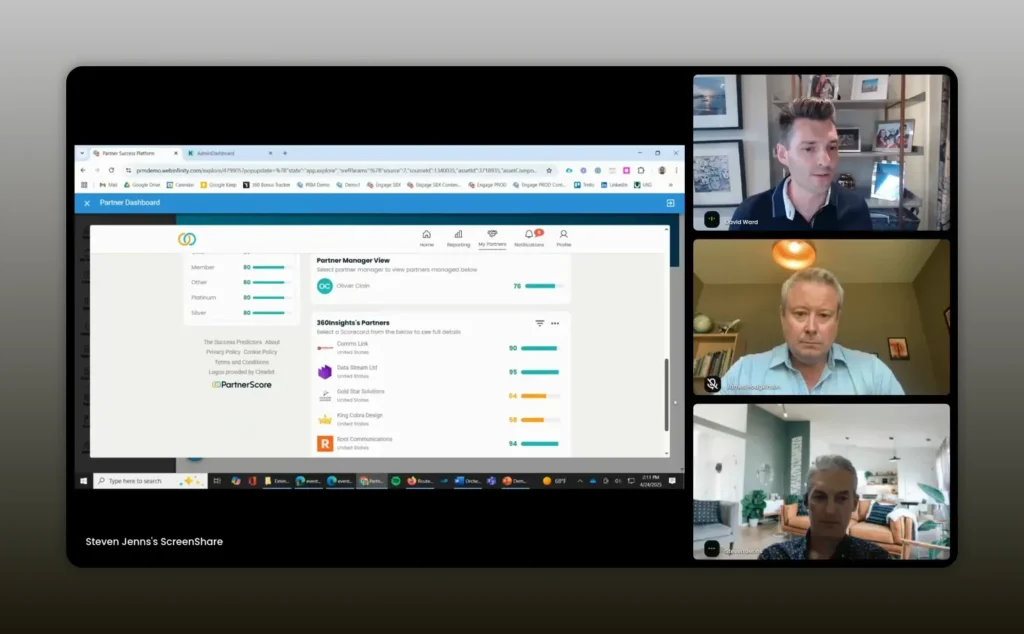

David Ward launched Partner Score to answer a deceptively simple B2B question: why do some partners flourish while others stagnate despite similar incentives and investments? The key insight is that relationship health is the number-one leading indicator of partnership success.

Relationship quality predicts revenue outcomes more reliably than any single commercial metric -David Ward

Partner Score treats relationship data as a continuous feedback stream, not an annual checkbox. Think of it like an Uber rating for partnerships: short, frequent prompts that capture the sentiment of the team working the relationship. That stream becomes a third dataset alongside revenue and engagement.

Key elements of a Partner Score approach

- Network lens: measure relationships not just vendor-to-partner but partner-to-partner and multi-vendor interactions.

- Micro-feedback: embed short ratings at the moment of interaction — onboarding, deal close, MDF activity — rather than long, infrequent surveys.

- Scorecards: build live partner scorecards that combine seven relationship predictors such as commitment, communication quality, conflict levels, and operational friction.

- Actionability: tie alerts into workflows so partner managers get notified early and can intervene before revenue drops.

- AI-driven categorization: use models to structure open comments into themes and trends across geographies and languages.
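As a minimal sketch of how micro-feedback events might roll up into a live per-partner scorecard, the example below averages ratings per partner and predictor. The event shape, the 1-5 rating scale, the moment labels, and the four predictor names are all assumptions for illustration; this is not Partner Score's actual data model or API.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean

# Hypothetical subset of relationship predictors (the source names commitment,
# communication quality, conflict levels, and operational friction among seven).
PREDICTORS = ("commitment", "communication", "conflict", "friction")


@dataclass(frozen=True)
class FeedbackEvent:
    partner_id: str
    moment: str     # where the prompt fired, e.g. "onboarding", "deal_close", "mdf_activity"
    predictor: str  # which relationship predictor the prompt measured
    rating: int     # 1 (poor) to 5 (excellent); assumed oriented so higher is always better


def build_scorecards(events):
    """Average micro-feedback ratings per partner and per predictor."""
    buckets = defaultdict(lambda: defaultdict(list))
    for e in events:
        if e.predictor not in PREDICTORS:
            continue  # ignore predictors outside the agreed schema
        if not 1 <= e.rating <= 5:
            raise ValueError(f"rating out of range: {e.rating}")
        buckets[e.partner_id][e.predictor].append(e.rating)
    return {
        pid: {pred: round(mean(ratings), 2) for pred, ratings in by_pred.items()}
        for pid, by_pred in buckets.items()
    }


events = [
    FeedbackEvent("acme", "onboarding", "commitment", 4),
    FeedbackEvent("acme", "deal_close", "commitment", 5),
    FeedbackEvent("acme", "deal_close", "conflict", 2),
]
print(build_scorecards(events))  # {'acme': {'commitment': 4.5, 'conflict': 2}}
```

Because each event carries the moment it was captured, the same stream can later be sliced by onboarding, deal close, or MDF activity without re-surveying anyone.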

Why this matters to you: if you couple relationship signals with commercial data, you get early warning signals and an intelligent way to prioritize scarce enablement resources. You stop chasing lagging revenue metrics and start fixing the root causes that prevent partners from performing.

Learn more about the 360insights integration and partnership with Partner Score here.

Why 360insights Partnered with Twogether

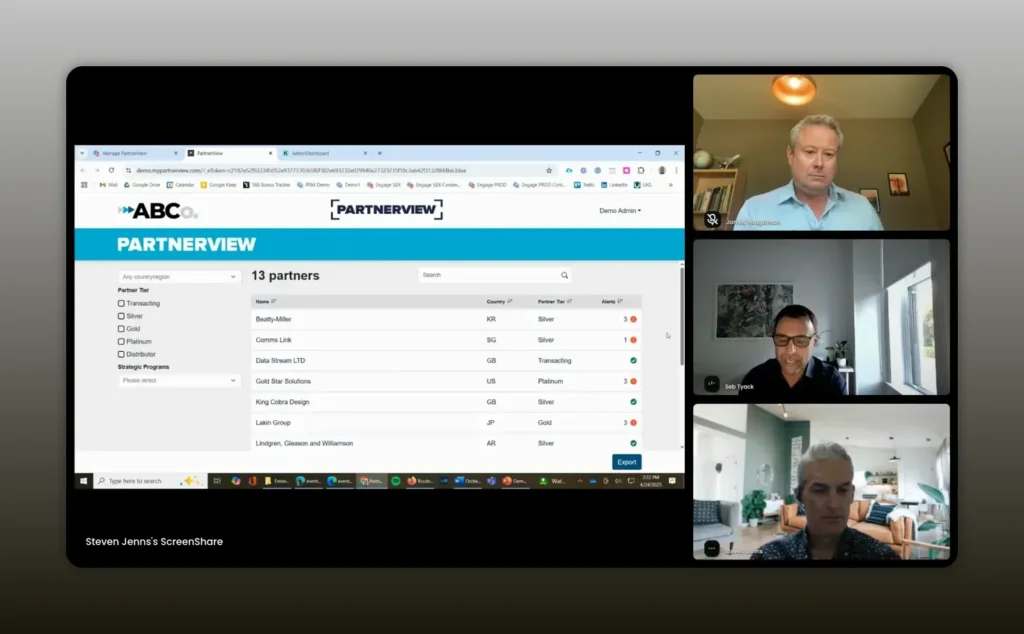

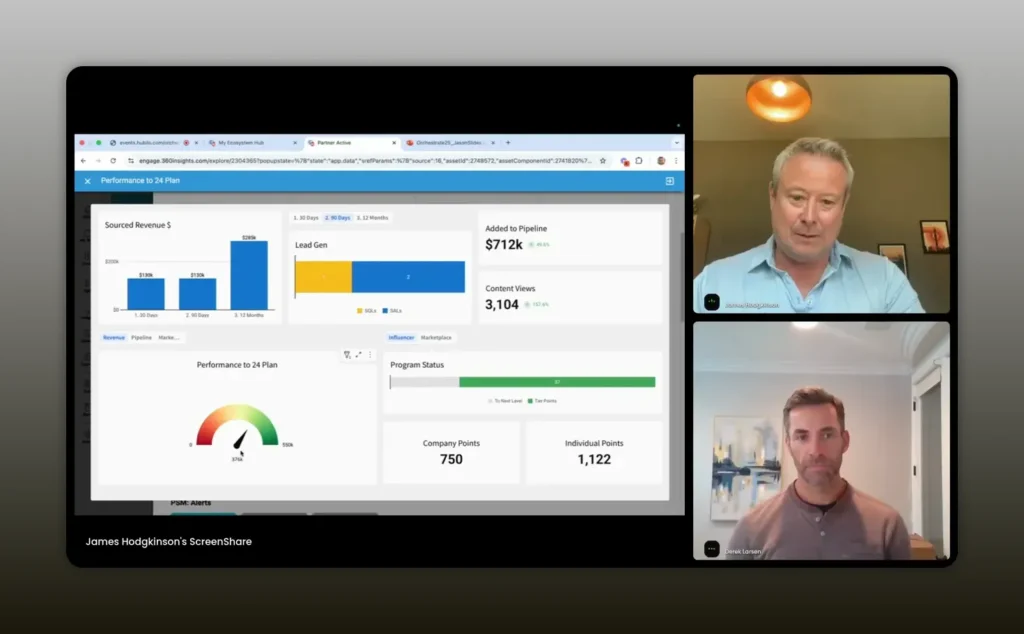

Seb Tyack and the Twogether team built PartnerView to make partner intelligence configurable and consumable by both partner managers and partners themselves. PartnerView is an example of bringing the semantic layer to life: it lets you declare program logic, tier rules, and performance buckets without heavy engineering effort.

Expose the same rules and data to partners you use internally; transparency reduces friction and drives productivity -Seb Tyack

How PartnerView helps you operationalize data

- Configurable dashboards: tailor dashboards by partner type, tier, or program so every partner sees exactly what matters to them.

- Program versions and cloning: test different program rules and simulate how they would affect partner attainment using real data feeds.

- Meta fields and tags: create strategic groups, geographies, or accelerator cohorts and measure them separately.

- Data connectors: feed revenue, LMS completion, deal registration, PRM, and ERP data into a unified partner view.

- Partner self-serve: partners can see attainment, benefits, competencies, and next steps in one place.
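PartnerView is configured without code, so the following is only a conceptual sketch of what declarative tier rules and program cloning amount to: a program version is data, a clone is a copy with one rule changed, and a simulation replays real partner figures through both. The tier names, thresholds, and class names are hypothetical.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class TierRule:
    name: str
    min_revenue: float       # trailing revenue threshold (currency assumed)
    min_certifications: int  # LMS completions required


@dataclass(frozen=True)
class ProgramVersion:
    label: str
    tiers: tuple  # TierRule objects, ordered highest tier first

    def assign_tier(self, revenue, certifications):
        """Return the first (highest) tier whose rules the partner satisfies."""
        for tier in self.tiers:
            if revenue >= tier.min_revenue and certifications >= tier.min_certifications:
                return tier.name
        return "Unqualified"


def simulate(program, partners):
    """Map each partner to the tier it would reach under this program version."""
    return {pid: program.assign_tier(rev, certs) for pid, (rev, certs) in partners.items()}


current = ProgramVersion("FY25", (
    TierRule("Gold", 1_000_000, 5),
    TierRule("Silver", 250_000, 2),
))
# Clone the program and lower the Gold revenue bar to test a what-if scenario.
draft = replace(
    current,
    label="FY26-draft",
    tiers=(replace(current.tiers[0], min_revenue=750_000),) + current.tiers[1:],
)

partners = {"acme": (800_000, 6), "bolt": (300_000, 1)}
print(simulate(current, partners))  # {'acme': 'Silver', 'bolt': 'Unqualified'}
print(simulate(draft, partners))    # {'acme': 'Gold', 'bolt': 'Unqualified'}
```

Keeping versions immutable means the live program is never edited in place; a draft only replaces it once the simulated attainment looks right.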

Benefits you’ll see quickly

- Faster adoption of partner programs because partners understand entitlements and next steps.

- Reduced administrative lift for partner account managers who can focus on growth activities instead of chasing data.

- Better internal alignment: sales, rewards, enablement, and operations all work from a single source of truth.

Learn more about the 360insights integration and partnership with Twogether here.

Why 360insights Partnered with Spalding Ridge

Derek made a crucial point: you cannot expect every customer to move their data to a single platform. Data gravity, security policies, and cost considerations mean the practical approach is to stitch and transform.

Let the data sit where it is; create repeatable integration patterns that turn disparate sources into trusted pipelines -Derek Larson

Architectural principles that make stitching practical

- Semantic layer first: define a canonical model that maps fields from CRMs, PRMs, LMS, MDF logs, and incentive systems into consistent entities such as partner, opportunity, and program.

- Repeatable ETL patterns: use tools like dbt for transformations and Snowflake or equivalent for secure data sharing.

- Trusted primitives: establish data contracts for frequency, freshness, and lineage so downstream dashboards and agents can rely on them.

- Proven connectors: maintain a library of adapters (CRM to canonical model, LMS to canonical model, incentives ledger to canonical model).

- Orchestration over relocation: orchestrate views and workflows without forcing a full migration.
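To make the connector idea concrete, here is a minimal sketch of adapters that map source records into one canonical partner entity. In practice this mapping would more likely live in dbt models in the warehouse, as the text suggests; Python is used here only to show the shape. The source field names and the `FIELD_MAPS` table are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CanonicalPartner:
    partner_id: str
    name: str
    tier: str
    region: str


# Hypothetical field maps: canonical field -> field name in each source system.
FIELD_MAPS = {
    "crm": {"partner_id": "account_id", "name": "account_name", "tier": "partner_tier", "region": "country"},
    "prm": {"partner_id": "partner_key", "name": "company", "tier": "program_level", "region": "territory"},
}


def to_canonical(source, record):
    """Map one source record into the canonical partner entity, failing loudly on gaps."""
    mapping = FIELD_MAPS[source]
    missing = [src for src in mapping.values() if src not in record]
    if missing:
        raise KeyError(f"{source} record missing fields: {missing}")
    return CanonicalPartner(**{canon: str(record[src]) for canon, src in mapping.items()})


crm_row = {"account_id": "001A", "account_name": "Acme Ltd", "partner_tier": "Gold", "country": "UK"}
prm_row = {"partner_key": "P-77", "company": "Acme Ltd", "program_level": "Gold", "territory": "EMEA"}
print(to_canonical("crm", crm_row))
print(to_canonical("prm", prm_row))
```

Adding a new source then means adding one entry to the field map rather than writing a new integration, which is the "proven connectors" idea in miniature.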

This approach yields two immediate advantages. First, it reduces friction for customers who have mature investments in BI and data platforms. Second, it enables a composable ecosystem where each partner or vendor can contribute its piece of intelligence to the canonical picture.

Learn more about 360insights integration and partnership with Spalding Ridge here.

Composability and standards: design patterns that scale

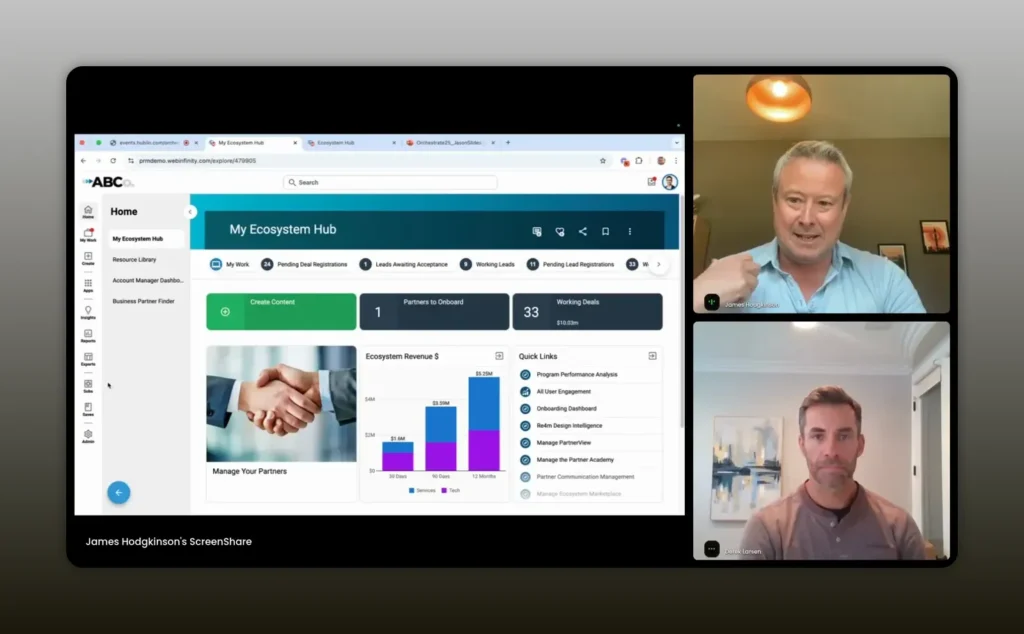

James and Derek emphasized composability as the operating model. Composability means picking small, well-defined building blocks — partner scoring, deal registration, MDF management, training progress — and enabling them to be assembled into complete experiences.

Standards unlock exponential scaling — pre-built data schemas and repeatable design patterns make enterprise rollouts practical -Derek Larson

Elements of a composable approach

- Domain-specific modules: keep specialized capabilities modular — one module for incentives, one for partner health, one for account mapping.

- Semantic contracts: publish a schema that all modules adhere to so they can be woven together into dashboards and agent inputs.

- Experience composition: allow a single storefront to present components from multiple vendors as a single pane for the partner.

- Persona-driven views: render the same underlying data with different UIs depending on whether the viewer is an executive, a partner account manager, or the partner.
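A minimal sketch of composition under a shared contract, assuming each module is simply a function from a partner ID to a dict of canonical fields: the modules are merged into one record, and each persona sees a different slice of it. The module names, returned values, and persona field lists are hypothetical stand-ins for real incentive, health, and account-mapping services.

```python
from typing import Callable, Dict, List

# Shared contract: a module takes a partner_id and returns canonical field -> value.
Module = Callable[[str], Dict[str, object]]


def incentives_module(partner_id: str) -> Dict[str, object]:
    return {"rebate_earned": 12_500.0}  # placeholder value for illustration


def health_module(partner_id: str) -> Dict[str, object]:
    return {"health_score": 4.2}  # placeholder value for illustration


def account_mapping_module(partner_id: str) -> Dict[str, object]:
    return {"overlapping_accounts": 37}  # placeholder value for illustration


# Which canonical fields each persona is shown (hypothetical).
PERSONA_FIELDS = {
    "executive": ["health_score", "rebate_earned"],
    "partner_manager": ["health_score", "overlapping_accounts", "rebate_earned"],
    "partner": ["rebate_earned"],
}


def compose(partner_id: str, modules: List[Module]) -> Dict[str, object]:
    """Merge module outputs into one record; two modules may not claim the same field."""
    record: Dict[str, object] = {"partner_id": partner_id}
    for module in modules:
        contribution = module(partner_id)
        clash = record.keys() & contribution.keys()
        if clash:
            raise ValueError(f"modules disagree on fields: {sorted(clash)}")
        record.update(contribution)
    return record


def render(record: Dict[str, object], persona: str) -> Dict[str, object]:
    """Project the same record into the fields a given persona should see."""
    return {f: record[f] for f in PERSONA_FIELDS[persona] if f in record}


rec = compose("acme", [incentives_module, health_module, account_mapping_module])
print(render(rec, "partner"))  # {'rebate_earned': 12500.0}
```

Rejecting overlapping fields is one way to keep the semantic contract honest: each field has exactly one owning module.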

Practical consequences

When you standardize the data contracts and commoditize common dashboards, you reduce bespoke engineering for every new customer. Pre-built boards and standard datasets let you move from project mode to product mode — faster deployments, predictable outcomes, and easier upgrades.

When AI agents can safely run the engine: a practical roadmap

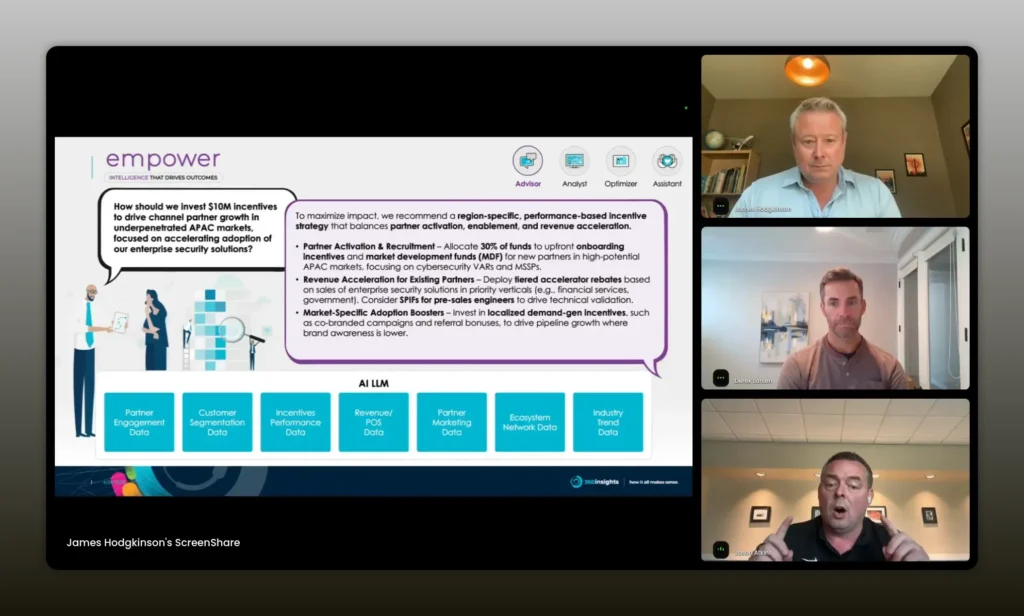

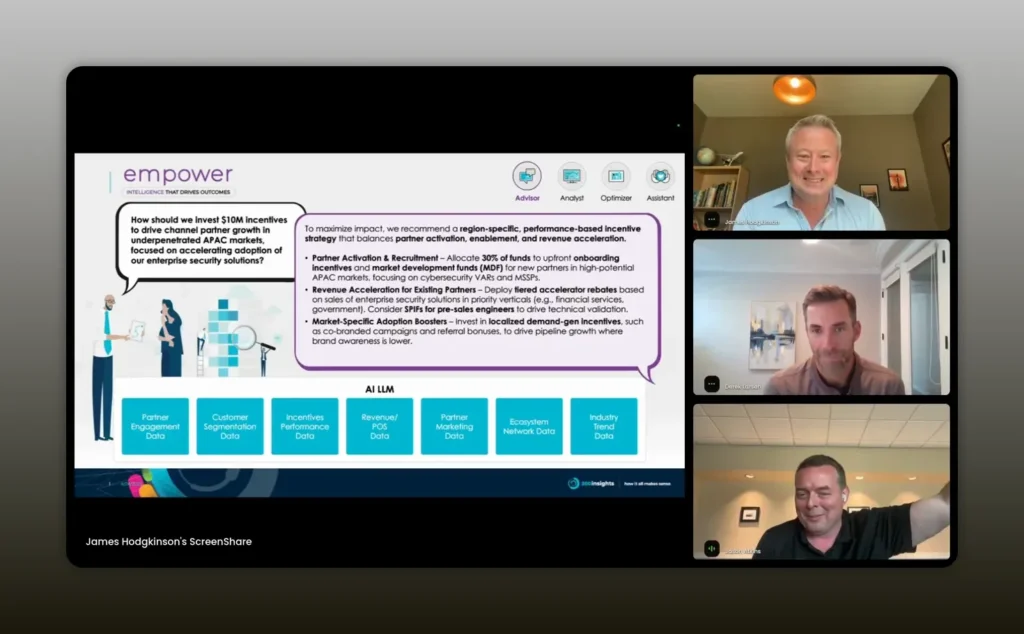

Jason Atkins painted a future where conversational agents become the primary interface to run partner programs. Rather than manually logging into systems to configure MDF, launch campaigns, or approve rebates, you will tell an agent what you want and it will execute across systems.

Agents will recommend actions and even execute them with human approval — the AI will be your operational co-pilot -Jason Atkins

But this future rests on a few non-negotiables:

- High-quality canonical data: garbage in, garbage out. LLM-driven recommendations depend on the trustworthiness and completeness of your canonical model.

- Explainability: people will not accept automated actions unless they understand why they are recommended. Provide traceable decision paths.

- Safe guardrails: limit agent actions to predefined playbooks and require human consent for high-risk executions.

- Continuous monitoring: monitor outcomes and model drift to ensure recommendations stay valid as markets and partners evolve.
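The guardrail and explainability requirements can be sketched as a gate in front of every agent action. This assumes a hypothetical registry of playbooks, each tagged low or high risk: unknown actions are rejected, high-risk actions wait for a named human approver, and every decision is logged with the agent's stated rationale so it can be traced later. The action names and risk levels are illustrative only.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical playbook registry: allowed action -> risk level.
PLAYBOOKS = {
    "generate_report": "low",
    "send_partner_reminder": "low",
    "adjust_mdf_allocation": "high",
    "approve_rebate": "high",
}


@dataclass
class AgentGuardrail:
    audit_log: list = field(default_factory=list)

    def execute(self, action, rationale, approved_by=None):
        """Allow only known playbooks; high-risk actions require a human approver."""
        risk = PLAYBOOKS.get(action)
        if risk is None:
            outcome = "rejected: not in playbook"
        elif risk == "high" and approved_by is None:
            outcome = "pending: human approval required"
        else:
            outcome = "executed"  # placeholder: a real system would call the downstream API here
        # Log every decision with its rationale for explainability and audit.
        self.audit_log.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "action": action,
            "risk": risk,
            "rationale": rationale,
            "approved_by": approved_by,
            "outcome": outcome,
        })
        return outcome


guard = AgentGuardrail()
print(guard.execute("generate_report", "weekly partner summary"))           # executed
print(guard.execute("approve_rebate", "claim matches program rules"))       # pending: human approval required
print(guard.execute("approve_rebate", "claim matches program rules", "ops"))  # executed
```

Widening an agent's responsibilities, as the timeline below describes, then becomes a reviewable change to the registry rather than a change to the agent itself.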

Suggested timeline

- 0-3 months: Build canonical schema, identify primary data sources, and instrument partner health signals.

- 3-9 months: Deploy combined dashboards and automated alerts; begin small agent pilots for low-risk tasks such as report generation and communications.

- 9-18 months: Expand agent responsibilities to configuration tasks with human confirmation; implement explainability layers.

- 18+ months: Move toward agent-assisted execution with audited automation for routine program operations while retaining human-in-the-loop for strategic changes.

This progression lets you harvest immediate business value while mitigating operational risk as you scale AI-driven orchestration.

How to start: an actionable 90-day plan

Getting started is about focusing on the few things that unlock the rest. Follow this 90-day plan to create momentum.

- Inventory: catalog all partner-related data sources and owners. Include PRM, CRM, LMS, MDF ledger, incentives platform, and any third-party sources like Crossbeam.

- Define canonical model: create an initial semantic layer that maps key entities and metrics. Prioritize partner, opportunity, deal status, program, tier, and partner health indicators.

- Pick one use case: choose a high ROI problem such as conflict-prone deal registrations, MDF attribution, or partner onboarding completion.

- Deploy measurement: implement Partner Score micro-feedback for the chosen use case and feed it into your canonical model.

- Create one dashboard: assemble data from multiple sources into a single, persona-driven dashboard and expose it to partner managers.

- Operationalize alerting: build a small set of automated alerts tied to playbooks for partner managers.

- Run a pilot: execute the pilot with a small group of partners and iterate based on real-world feedback.
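For the "operationalize alerting" step, a minimal sketch of alert rules that couple relationship signals with commercial data and attach a playbook to each alert. The snapshot fields, thresholds, and playbook names are assumptions to be tuned during the pilot, not recommended values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PartnerSnapshot:
    partner_id: str
    health_score: float        # composite micro-feedback score, 1-5 scale assumed
    health_trend: float        # change in health score vs. the previous period
    revenue_qoq_change: float  # quarter-over-quarter revenue change, e.g. -0.05 = -5%


# Hypothetical rules: (alert name, condition, playbook handed to the partner manager).
ALERT_RULES = [
    # Early warning: relationship health falling even while revenue may still look fine.
    ("declining_health", lambda s: s.health_trend <= -0.5, "schedule_relationship_review"),
    # Lagging confirmation: weak relationship and shrinking revenue together.
    ("at_risk", lambda s: s.health_score < 3.0 and s.revenue_qoq_change < 0, "escalate_to_partner_manager"),
]


def evaluate_alerts(snapshots):
    """Return one alert per (partner, rule) match, each tied to its playbook."""
    alerts = []
    for s in snapshots:
        for name, condition, playbook in ALERT_RULES:
            if condition(s):
                alerts.append({"partner_id": s.partner_id, "alert": name, "playbook": playbook})
    return alerts


snapshots = [
    PartnerSnapshot("acme", 3.4, -0.6, 0.08),
    PartnerSnapshot("bolt", 2.7, -0.1, -0.12),
    PartnerSnapshot("core", 4.5, 0.2, 0.03),
]
for alert in evaluate_alerts(snapshots):
    print(alert)
```

Note that "acme" is flagged while its revenue is still growing; that is the leading-indicator behavior relationship data is meant to provide.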

Deliverables after 90 days

- Canonical schema and data map

- Live partner score stream for the pilot cohort

- Persona-driven dashboard for partner managers

- Operational playbooks and alerts

Common pitfalls and how to avoid them

Many organizations stumble when they treat data as a project instead of a product. The following pitfalls are recurring and avoidable.

- Pitfall: Siloed ownership of partner data. Fix: create a cross-functional data stewardship council.

- Pitfall: Trying to migrate all data before showing value. Fix: stitch and expose rather than moving everything at once.

- Pitfall: Overly complex feedback surveys. Fix: embed micro-feedback and surface insights immediately.

- Pitfall: No governance around AI actions. Fix: define playbooks and a safe execution boundary for agents.

- Pitfall: Ignoring partner experience. Fix: give partners transparent access to their own metrics and next steps.

Metrics that matter for ecosystem performance

Moving beyond vanity metrics requires a mix of behavioral, financial, and relationship indicators. Below are core metrics to track:

- Partner Health Score: composite of micro-feedback scores across commitment, communication, and conflict.

- Partner Activation Rate: percent of partners completing onboarding and baseline competencies.

- Deal Velocity: average time from registration to close for partner-led deals.

- MDF ROI: incremental revenue attributed to MDF spend divided by MDF cost.

- Pipeline Coverage: partner-generated pipeline as a percent of target across segments.

- Engagement to Revenue Conversion: rate at which partner engagement activities translate into bookings.
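The metric definitions above translate directly into small functions. This sketch follows the definitions as written; the equal default weighting in the health composite and the shape of the deal records are assumptions.

```python
from datetime import date
from statistics import mean


def activation_rate(partners_total, partners_activated):
    """Percent of partners that completed onboarding and baseline competencies."""
    return 100.0 * partners_activated / partners_total if partners_total else 0.0


def deal_velocity_days(deals):
    """Average days from registration to close for closed partner-led deals."""
    durations = [(d["closed"] - d["registered"]).days for d in deals if d.get("closed")]
    return mean(durations) if durations else None


def mdf_roi(incremental_revenue, mdf_cost):
    """Incremental revenue attributed to MDF spend divided by MDF cost."""
    return incremental_revenue / mdf_cost


def pipeline_coverage(partner_pipeline, target):
    """Partner-generated pipeline as a percent of target."""
    return 100.0 * partner_pipeline / target


def health_score(components, weights=None):
    """Weighted composite of relationship components; equal weights unless given."""
    weights = weights or {k: 1.0 for k in components}
    total = sum(weights[k] for k in components)
    return sum(components[k] * weights[k] for k in components) / total


deals = [
    {"registered": date(2024, 1, 1), "closed": date(2024, 1, 31)},
    {"registered": date(2024, 2, 1), "closed": date(2024, 2, 11)},
    {"registered": date(2024, 3, 1), "closed": None},  # still open, excluded
]
print(activation_rate(200, 150))                    # 75.0
print(deal_velocity_days(deals))                    # 20
print(mdf_roi(300_000, 100_000))                    # 3.0
print(pipeline_coverage(1_200_000, 1_000_000))      # 120.0
print(health_score({"commitment": 4.0, "communication": 3.0, "conflict": 5.0}))  # 4.0
```

As defined here, MDF ROI is a ratio (3.0 means three units of incremental revenue per unit of MDF spent), not (revenue minus cost) divided by cost; pick one convention and keep it consistent across dashboards.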

These metrics become far more useful when they are combined in layered dashboards and used as inputs for agent recommendations.

Security, privacy, and trust in ecosystem data

Orchestrating ecosystem intelligence requires a careful approach to data protection. You will be combining proprietary partner signals, commercial information, and often PII. Follow these principles:

- Least privilege access to canonical datasets and APIs

- Encryption in transit and at rest

- Audit logging for all automated actions and agent recommendations

- Clear data-sharing agreements with partners and vendors

- Model governance and explainability to satisfy compliance reviews
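Least privilege and partner confidentiality can be sketched as a deny-by-default read path: a role may only touch datasets it is explicitly granted, and external partner users only ever see their own rows. The role names echo the playbook roles later in this article, but the grant table and row shape are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical grants: anything not listed is denied.
ROLE_GRANTS = {
    "partner_manager": {"partner_health", "deals", "mdf"},
    "program_ops": {"mdf", "incentives"},
    "partner": {"deals", "incentives"},
}


@dataclass(frozen=True)
class Principal:
    user_id: str
    role: str
    partner_id: Optional[str] = None  # set only for external partner users


def read_rows(principal, dataset, rows):
    """Return only the rows this principal may see; deny by default."""
    if dataset not in ROLE_GRANTS.get(principal.role, set()):
        raise PermissionError(f"{principal.role} may not read {dataset}")
    if principal.role == "partner":
        # Partners see their own records, never another partner's.
        return [r for r in rows if r["partner_id"] == principal.partner_id]
    return list(rows)


rows = [{"partner_id": "acme", "amount": 10}, {"partner_id": "bolt", "amount": 20}]
print(read_rows(Principal("u1", "partner", "acme"), "deals", rows))  # only acme's row
```

In a warehouse such as Snowflake the same intent would normally be enforced with native roles and row-level policies rather than application code; the sketch only shows the rule being enforced.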

Trust is also social. Expose partner-level dashboards and explain how data is used. When partners see the direct business value, they will be more willing to provide richer signals.

Scaling partner experiences across geographies and languages

One of the surprising wins organizations see is the multiplier effect of scaling partner-facing resources globally. But that requires three capabilities:

- Multi-language feedback capture and AI categorization

- Localized program rules that respect regional market dynamics

- Consistent metrics and dashboards with region-specific filters

David Ward mentioned that Partner Score runs in 50 countries and handles multiple languages. That global reach only works because the feedback is structured and AI is applied to surface themes at the aggregate level while preserving local nuance.
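A minimal sketch of the aggregation half of that pattern, assuming each comment has already been tagged with a theme by an upstream AI model (the categorization step itself is not shown). Themes are counted globally and per region, so leaders see the aggregate picture while regional teams keep their local top issues. The comment fields and theme labels are hypothetical.

```python
from collections import Counter, defaultdict


def theme_summary(comments, top_n=3):
    """Count model-assigned themes globally and per region."""
    global_counts = Counter(c["theme"] for c in comments)
    by_region = defaultdict(Counter)
    for c in comments:
        by_region[c["region"]][c["theme"]] += 1
    return {
        "global": global_counts.most_common(top_n),
        "regions": {region: counts.most_common(top_n) for region, counts in by_region.items()},
    }


# Original language is kept alongside the theme so analysts can drill into the raw comment.
comments = [
    {"region": "DE", "lang": "de", "theme": "deal_conflict"},
    {"region": "DE", "lang": "de", "theme": "deal_conflict"},
    {"region": "JP", "lang": "ja", "theme": "onboarding"},
    {"region": "FR", "lang": "fr", "theme": "deal_conflict"},
]
summary = theme_summary(comments)
print(summary["global"])           # [('deal_conflict', 3), ('onboarding', 1)]
print(summary["regions"]["JP"])    # [('onboarding', 1)]
```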

Integration checklist for your tech stack

Before you build or buy, verify the following items for each system you plan to integrate:

- Access to a stable API or secure data export

- Field mapping for canonical model entities

- Data freshness guarantees

- Lineage metadata to trace where metrics came from

- Permissioning that respects partner confidentiality
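Three of these checks (field mapping, freshness, lineage) can be automated against a simple data contract per source. The sketch below assumes a hypothetical `DataContract` with required canonical fields and a maximum staleness; API stability and permissioning still need review by people.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class DataContract:
    source: str
    required_fields: frozenset  # canonical fields this source must map
    max_staleness: timedelta    # freshness guarantee


def check_source(contract, mapped_fields, last_loaded_at, lineage, now=None):
    """Return checklist failures for one source; an empty list means it passes."""
    now = now or datetime.now(timezone.utc)
    failures = []
    missing = contract.required_fields - set(mapped_fields)
    if missing:
        failures.append(f"unmapped fields: {sorted(missing)}")
    if now - last_loaded_at > contract.max_staleness:
        failures.append(f"stale: last load {now - last_loaded_at} ago")
    if not lineage:
        failures.append("no lineage metadata")
    return failures


now = datetime(2024, 6, 1, 12, tzinfo=timezone.utc)
lms = DataContract("lms", frozenset({"partner_id", "course_id", "completed_at"}), timedelta(hours=24))
print(check_source(lms, ["partner_id", "course_id", "completed_at"],
                   now - timedelta(hours=2), {"upstream": "lms_export"}, now=now))  # []
print(check_source(lms, ["partner_id"], now - timedelta(days=3), {}, now=now))
```

Running such checks on every load, rather than once at integration time, is what turns the checklist into the "trusted primitives" downstream dashboards and agents rely on.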

Vendor and partner playbook: roles and responsibilities

To make orchestration practical, clarify who owns what. A typical playbook includes:

- Partner Manager: owns relationship health and intervention playbooks

- Program Ops: owns MDF rules, incentives setup, and program configuration

- Data Stewards: own canonical model, ETL cadence, and data quality

- AI/Analytics: owns models that generate recommendations and monitor model drift

- Security/Legal: owns data sharing agreements and audits

Make these roles explicit so your ecosystem behaves like a product organization instead of a project-based collaboration.

FAQs

What is partner-led proprietary data and why is it important?

Partner-led proprietary data includes signals produced by partners such as deal registration details, MDF usage, enablement completion, market feedback, and micro-feedback on relationships. This data is important because it captures real-world, first-party behaviors and sentiments that are unique to your ecosystem and are powerful inputs for AI models and orchestration logic.

How often should I collect partner feedback?

Frequent, contextual micro-feedback is better than infrequent long surveys. Embed short rating prompts at meaningful moments: onboarding completion, deal close, MDF execution, technical enablement. The objective is to create a continuous stream of signals that can feed real-time scorecards and alerts.

Can I integrate existing dashboards and BI tools without migrating all my data?

Yes. The recommended approach is to create a semantic layer and use repeatable integration patterns that allow data to remain where it provides the best value while exposing transformed views for orchestration and dashboards. Tools like Snowflake, dbt, and secure APIs make this feasible.

What governance is needed before enabling AI-driven actions?

You need clear playbooks, human-in-the-loop checkpoints for strategic actions, explainability layers that justify recommendations, audit trails for automated steps, and role-based permissions. Start with low-risk automations and expand responsibilities as trust builds.

How do I prioritize which use case to tackle first?

Choose a use case that balances business impact and data readiness. Good early wins are reducing conflict in deal registrations, improving MDF ROI attribution, and increasing partner onboarding completions. These are measurable and have clear intervention playbooks.

How long will it take to get AI agents to execute tasks autonomously?

Expect a phased timeline. Within roughly 3-9 months you can have agent-assisted reporting and recommendations. Within 9-18 months you can safely expand agents to execute lower-risk operational tasks under human approval. Full autonomous execution across strategic workflows will take longer and requires mature data, governance, and monitoring.

Conclusion

Orchestrating an ecosystem is a systems problem that blends people, processes, and technology. The practical path forward is clear: standardize your data contracts, instrument partner relationships with continuous feedback, stitch data where it lives, and build composable experiences that deliver value to partners and internal stakeholders. As you mature, deploy AI to recommend and then to execute with guardrails. The result will be a resilient, scalable ecosystem where partner intelligence becomes your strategic edge.